HRNet targets 2D single-person human pose estimation. Existing deep-learning methods for human pose estimation fall into two categories:

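· Regression: directly predict the coordinates of each keypoint.

· Heatmap: predict one heatmap per keypoint, where every location holds a score for the keypoint appearing there.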
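To make the difference concrete: a heatmap-based method needs a decoding step that turns each predicted map back into a coordinate. The sketch below assumes the simplest possible rule, taking the location of the maximum score. `decode_heatmap` is a hypothetical helper name, and practical implementations usually add sub-pixel refinement on top.

```python
def decode_heatmap(heatmap):
    """Return (x, y, score) of the highest-scoring cell in a 2D heatmap.

    `heatmap` is a list of rows; x indexes columns, y indexes rows.
    """
    best_x, best_y, best_score = 0, 0, heatmap[0][0]
    for y, row in enumerate(heatmap):
        for x, score in enumerate(row):
            if score > best_score:
                best_x, best_y, best_score = x, y, score
    return best_x, best_y, best_score


# A toy 3x4 heatmap for a single keypoint (values are made up).
toy = [
    [0.0, 0.1, 0.0, 0.0],
    [0.1, 0.3, 0.9, 0.2],
    [0.0, 0.1, 0.2, 0.0],
]
print(decode_heatmap(toy))  # (2, 1, 0.9)
```

A regression method would instead output the pair `(x, y)` directly, with no intermediate map.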

HRNet (https://arxiv.org/abs/1902.09212) also takes the heatmap-based approach.

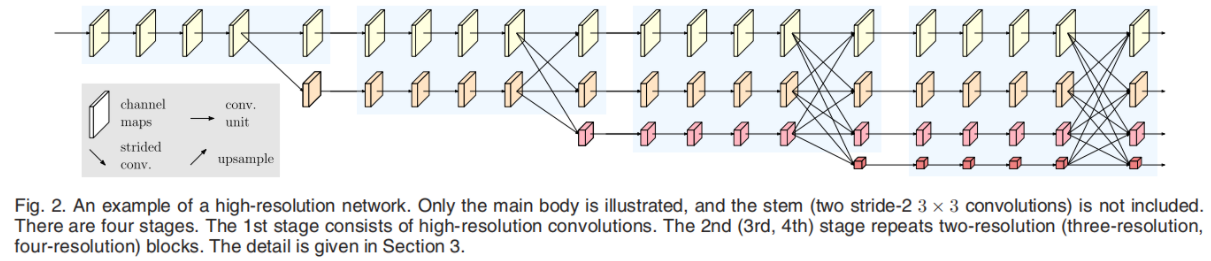

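Parallel high-to-low resolution subnetworks: HRNet connects the high-to-low resolution subnetworks in parallel rather than in series, as most existing solutions do. It therefore maintains a high-resolution representation throughout instead of recovering resolution through a low-to-high process, so the predicted heatmaps are potentially more spatially precise.

Multi-resolution subnetworks with multi-scale fusion: most existing fusion schemes aggregate low-level and high-level representations. HRNet instead performs repeated multi-scale fusion, using low-resolution representations of the same depth and similar level to boost the high-resolution representations, and vice versa, so that the high-resolution representations are also rich enough for pose estimation.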

HRNet network structure

· Step1 : The input first passes through two 3x3 convolution layers with stride 2, which downsample it by a factor of 4.

· Step2 : The layer1 module stacks Bottleneck blocks; it keeps the feature-map size unchanged and changes only the number of channels.

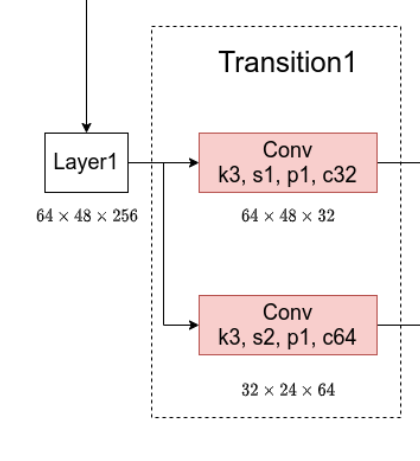

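· Step3 : The Transition1 structure then adds a new scale branch. Two parallel 3x3 convolution layers applied to the output of layer1 produce two branches at different scales (top: s=1, feature-map size unchanged; bottom: s=2, a further 2x downsampling).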

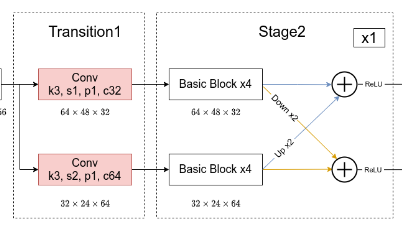

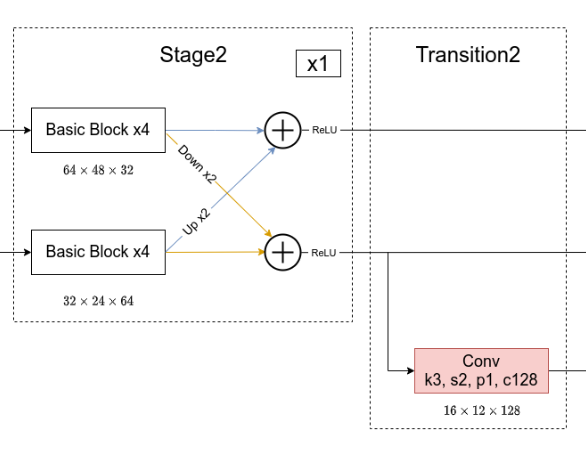

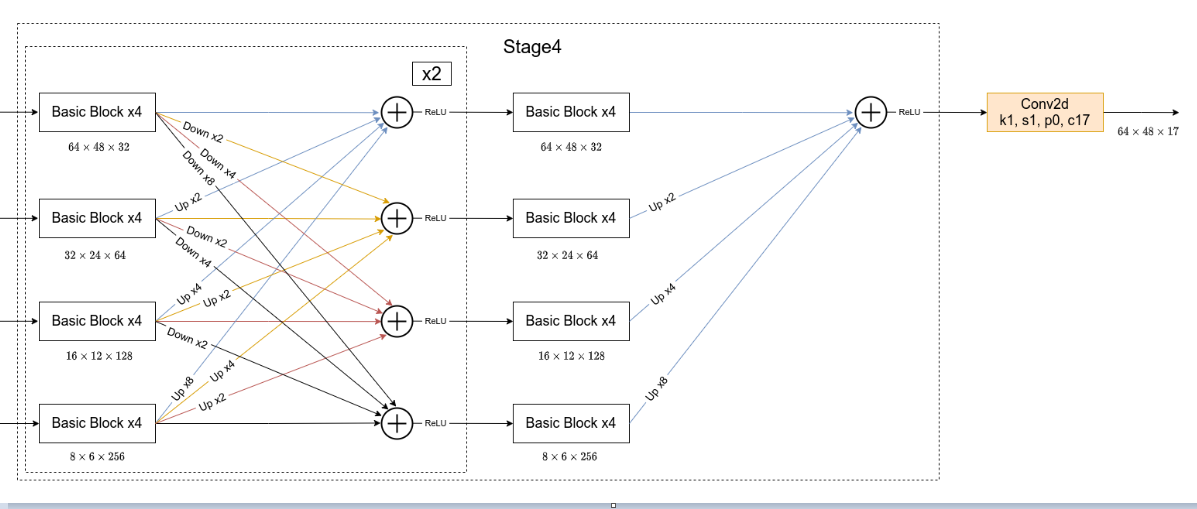

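· Step4 : In the stage2 module, each scale branch first passes through 4 Basic Blocks, and then the information from the different scales is fused.

output of branch 1 + output of branch 2 upsampled 2x -> ReLU -> branch 1

output of branch 2 + output of branch 1 downsampled 2x -> ReLU -> branch 2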

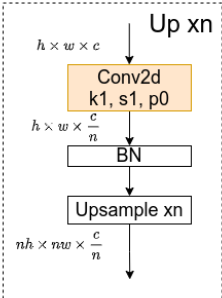

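Upsampling: a $1 \times 1$ convolution first reduces the number of channels without changing the feature-map size -> (BN) -> (nearest-neighbor interpolation) -> the feature map is enlarged and the channel count stays unchanged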

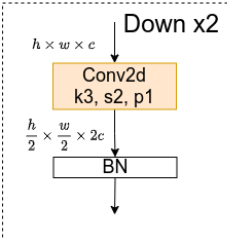

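Downsampling (Down×2): a $3 \times 3$ convolution with stride 2 reduces the feature map's $h, w$ and increases the number of channels -> BN

Down×4: two $3 \times 3$ stride-2 convolutions in sequence -> BN

Down×8: three $3 \times 3$ stride-2 convolutions in sequence -> BN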
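The upsampling half of the fusion can be illustrated on a single-channel map. This is only a sketch under simplifying assumptions: the learned $1 \times 1$ convolution and the BN are left out, feature maps are plain nested lists, and `upsample_nearest` and `fuse_into_high` are hypothetical helper names.

```python
def upsample_nearest(fmap, factor):
    """Nearest-neighbour upsampling of a 2D map (list of rows)."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(factor)]   # repeat each column
        out.extend([list(wide) for _ in range(factor)])  # repeat each row
    return out


def fuse_into_high(high, low, factor=2):
    """Branch-1 fusion: ReLU(high + upsample(low)), element-wise."""
    up = upsample_nearest(low, factor)
    return [[max(0.0, h + u) for h, u in zip(h_row, u_row)]
            for h_row, u_row in zip(high, up)]


high = [[1.0, -3.0], [0.5, 2.0]]   # 2x2 high-resolution map
low = [[1.0]]                      # 1x1 low-resolution map
print(upsample_nearest(low, 2))    # [[1.0, 1.0], [1.0, 1.0]]
print(fuse_into_high(high, low))   # [[2.0, 0.0], [1.5, 3.0]]
```

The downsampling direction has no parameter-free equivalent, because the stride-2 convolutions are learned.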

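· Step5 : Transition2 adds one more downsampled scale to the two existing branches. Note that the new branch is obtained directly from the existing lower-resolution branch through a single 3x3 convolution layer with stride 2; that is, the previous branch ($32 \times 24 \times 64$) is simply downsampled once.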

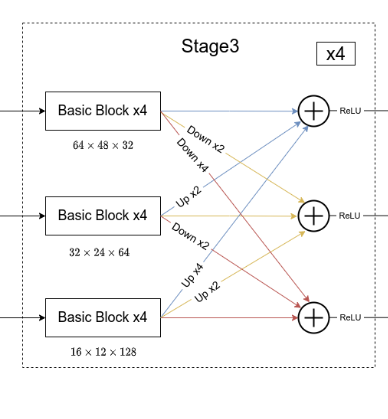

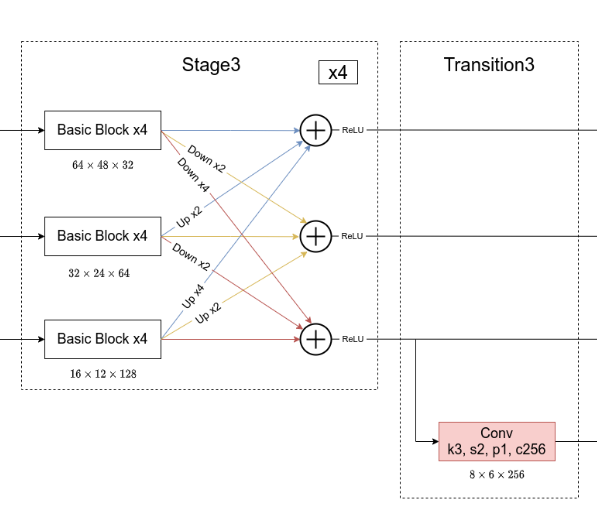

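· Step6 : In the stage3 module, each scale branch first passes through 4 Basic Blocks, and then the information from the different scales is fused. The output of every branch is obtained by fusing the outputs of all branches.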

· Step7 : Transition3 adds one more downsampled scale to the three existing branches, obtained by downsampling the last branch once.

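· Step8 : In the stage4 module, each scale branch first passes through 4 Basic Blocks, and then the information from the different scales is fused (this is repeated twice). The four branches then pass through 4 more Basic Blocks, the three lower branches are upsampled by their respective factors, and only the output of the highest-resolution branch is returned. A final $1 \times 1$ convolution layer with 17 kernels (COCO has 17 keypoints) produces the result.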
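The feature-map sizes along the steps above can be checked with a few lines of arithmetic. The sketch assumes a 256x192 input, padding 1 on every 3x3 convolution, and a first-branch width of 32 channels that doubles with each new branch; these values are assumptions chosen to match the $32 \times 24 \times 64$ figure in Step5. `conv_out_size` and `branch_shapes` are hypothetical helper names.

```python
def conv_out_size(size, kernel=3, stride=2, padding=1):
    """Spatial output size of a convolution (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


def branch_shapes(height, width, num_branches, base_channels=32):
    """(H, W, C) of each parallel branch after the two-convolution stem."""
    for _ in range(2):  # Step1: two 3x3 stride-2 convolutions
        height, width = conv_out_size(height), conv_out_size(width)
    shapes = []
    for i in range(num_branches):
        shapes.append((height, width, base_channels * 2 ** i))
        # Each new branch is a 3x3 stride-2 downsampling of the previous one.
        height, width = conv_out_size(height), conv_out_size(width)
    return shapes


for stage in (2, 3, 4):
    print(f"stage{stage}:", branch_shapes(256, 192, num_branches=stage))
# stage2: [(64, 48, 32), (32, 24, 64)]
# stage3: [(64, 48, 32), (32, 24, 64), (16, 12, 128)]
# stage4: [(64, 48, 32), (32, 24, 64), (16, 12, 128), (8, 6, 256)]
```

Under these assumptions the final $1 \times 1$ convolution maps the 64x48x32 branch to 17 heatmaps of size 64x48.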

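Loss computation

For each keypoint, start from a heatmap filled with zeros and set the value at the keypoint's coordinates to 1. Then apply a 2D Gaussian (without normalization) centered on the keypoint to obtain the ground-truth (GT) heatmap. The MSE loss is computed between this GT heatmap and the heatmap predicted by the network.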
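A minimal sketch of this target construction and loss, in plain Python. The value `sigma=2.0` and the 64x48 heatmap size are assumptions for illustration, and `gaussian_heatmap` and `mse` are hypothetical helper names; the Gaussian is left unnormalized, so its peak is exactly 1.

```python
import math


def gaussian_heatmap(height, width, cx, cy, sigma=2.0):
    """Unnormalised 2D Gaussian centred on (cx, cy); the peak value is 1."""
    return [
        [math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
         for x in range(width)]
        for y in range(height)
    ]


def mse(pred, target):
    """Mean squared error over all pixels of two equally sized maps."""
    total = sum((p - t) ** 2
                for p_row, t_row in zip(pred, target)
                for p, t in zip(p_row, t_row))
    return total / (len(target) * len(target[0]))


gt = gaussian_heatmap(64, 48, cx=20, cy=30)
blank = [[0.0] * 48 for _ in range(64)]
print(gt[30][20])          # 1.0 at the keypoint
print(mse(gt, gt))         # 0.0 for a perfect prediction
print(mse(blank, gt) > 0)  # True
```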

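Data augmentation

· Random rotation

· Random scaling

· Random horizontal flip

· half-body: with a certain probability, crop the target to half of the body

Note that the person's aspect ratio must stay unchanged when the image is resized. Do not simply stretch the image!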
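One common way to respect this constraint is to grow the person box to the network's input aspect ratio before cropping and resizing, so that the resize becomes a uniform scale. The sketch below assumes a 192x256 (width x height) network input; `fix_aspect_ratio` is a hypothetical helper name.

```python
def fix_aspect_ratio(x, y, w, h, target_w=192, target_h=256):
    """Grow a box (top-left x, y, width w, height h) around its centre
    until w / h equals target_w / target_h."""
    w, h = float(w), float(h)
    target_ratio = target_w / target_h
    cx, cy = x + w / 2, y + h / 2
    if w / h > target_ratio:
        h = w / target_ratio   # box too wide: increase the height
    else:
        w = h * target_ratio   # box too tall: increase the width
    return cx - w / 2, cy - h / 2, w, h


print(fix_aspect_ratio(100, 50, 60, 200))  # (55.0, 50.0, 150.0, 200.0)
```

The box only ever grows, so no part of the person is cut off; the extra area is filled by surrounding image content or padding.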

Code implementation

The implementation here is set in the context of 3D pose estimation with the SMPL model.

img -> (HRNet) -> feat_list -> (MLP) -> beta, theta, cam -> (smplx) -> 2D_joints

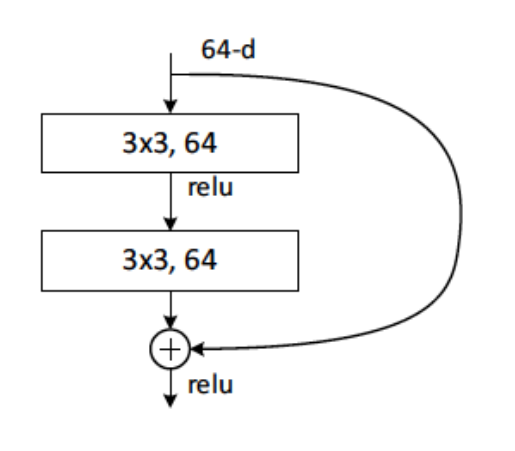

1. Preliminaries

==BasicBlock==: two $3 \times 3$ convolution layers; used in ResNet18 and ResNet34

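```python
import torch.nn as nn


def conv3x3(inchanel, outchanel, stride=1):
    # 3x3 convolution with padding 1, so only the stride changes the spatial size
    return nn.Conv2d(inchanel, outchanel, kernel_size=3, stride=stride, padding=1, bias=False)


class BasicBlock(nn.Module):
    # Standard ResNet-style basic block: two 3x3 convolutions plus a residual connection
    expansion = 1

    def __init__(self, inchanel, outchanel, stride=1, downsample=None):
        super(BasicBlock, self).__init__()
        self.conv1 = conv3x3(inchanel, outchanel, stride)
        self.bn1 = nn.BatchNorm2d(outchanel)
        self.relu = nn.ReLU(inplace=True)
        self.conv2 = conv3x3(outchanel, outchanel)
        self.bn2 = nn.BatchNorm2d(outchanel)
        self.downsample = downsample

    def forward(self, x):
        residual = x
        out = self.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))
        # Match the shortcut to the main path when the shape changes
        if self.downsample is not None:
            residual = self.downsample(x)
        out = out + residual
        return self.relu(out)
```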

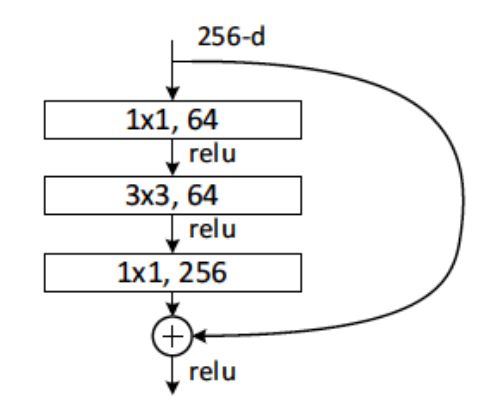

==Bottleneck==:

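```python
class Bottleneck(nn.Module):
    # Standard ResNet-style bottleneck: 1x1 -> 3x3 -> 1x1, output channels = outchanel * 4
    # Reuses conv3x3 from the BasicBlock listing above.
    expansion = 4

    def __init__(self, inchanel, outchanel, stride=1, downsample=None):
        super(Bottleneck, self).__init__()
        self.conv1 = nn.Conv2d(inchanel, outchanel, kernel_size=1, bias=False)
        self.bn1 = nn.BatchNorm2d(outchanel)
        self.conv2 = conv3x3(outchanel, outchanel, stride)
        self.bn2 = nn.BatchNorm2d(outchanel)
        self.conv3 = nn.Conv2d(outchanel, outchanel * self.expansion,
                               kernel_size=1, stride=1, bias=False)
        self.bn3 = nn.BatchNorm2d(outchanel * self.expansion)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample

    def forward(self, x):
        residual = x
        out = self.relu(self.bn1(self.conv1(x)))
        out = self.relu(self.bn2(self.conv2(out)))
        out = self.bn3(self.conv3(out))
        # Match the shortcut to the main path when the shape changes
        if self.downsample is not None:
            residual = self.downsample(x)
        out = out + residual
        return self.relu(out)
```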

2. HRNet implementation

==make_layer==: builds a branch from BasicBlocks (channel count unchanged) or Bottlenecks (channel count ×4)

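```python
# Method of the HRNet class; self.inplanes tracks the current input channel count.
def _make_layer(self, block, planes, blocks, stride=1):
    downsample = None
    # A projection shortcut is needed whenever the residual shape would not match
    if stride != 1 or self.inplanes != planes * block.expansion:
        downsample = nn.Sequential(
            nn.Conv2d(self.inplanes, planes * block.expansion,
                      kernel_size=1, stride=stride, bias=False),
            nn.BatchNorm2d(planes * block.expansion),
        )

    layers = []
    layers.append(block(self.inplanes, planes, stride, downsample))
    self.inplanes = planes * block.expansion
    for i in range(1, blocks):
        layers.append(block(self.inplanes, planes))

    return nn.Sequential(*layers)
```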

==make_transition_layer==: adds a new scale branch

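```python
# Method of the HRNet class.
def _make_transition_layer(self, num_channels_pre_layer, num_channels_cur_layer):
    num_branches_cur = len(num_channels_cur_layer)
    num_branches_pre = len(num_channels_pre_layer)

    transition_layers = []
    for i in range(num_branches_cur):
        if i < num_branches_pre:
            # Existing branch: adapt the channel count only if it changes
            if num_channels_cur_layer[i] != num_channels_pre_layer[i]:
                transition_layers.append(nn.Sequential(
                    nn.Conv2d(num_channels_pre_layer[i], num_channels_cur_layer[i],
                              3, 1, 1, bias=False),
                    nn.BatchNorm2d(num_channels_cur_layer[i]),
                    nn.ReLU(inplace=True)))
            else:
                transition_layers.append(None)
        else:
            # New branch: stride-2 3x3 convolutions on the last previous branch
            conv3x3s = []
            for j in range(i + 1 - num_branches_pre):
                inchannels = num_channels_pre_layer[-1]
                outchannels = num_channels_cur_layer[i] \
                    if j == i - num_branches_pre else inchannels
                conv3x3s.append(nn.Sequential(
                    # bias is redundant here because BatchNorm follows
                    nn.Conv2d(inchannels, outchannels, 3, 2, 1, bias=True),
                    nn.BatchNorm2d(outchannels),
                    nn.ReLU(inplace=True)))
            transition_layers.append(nn.Sequential(*conv3x3s))

    return nn.ModuleList(transition_layers)
```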
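The branching logic of this method can be traced without building any layers. The sketch below mirrors its index arithmetic and returns a text description per output branch. `transition_plan` is a hypothetical helper, and the channel lists are assumed example values (256 output channels from layer1, then widths 32, 64, 128).

```python
def transition_plan(pre_channels, cur_channels):
    """Describe what each output branch of a transition layer receives."""
    plan = []
    for i, out_ch in enumerate(cur_channels):
        if i < len(pre_channels):
            if out_ch != pre_channels[i]:
                plan.append(f"conv3x3 s=1: {pre_channels[i]} -> {out_ch}")
            else:
                plan.append("identity")
        else:
            # New branches are always derived from the last previous branch.
            steps = i + 1 - len(pre_channels)
            plan.append(f"{steps} x conv3x3 s=2: {pre_channels[-1]} -> {out_ch}")
    return plan


print(transition_plan([256], [32, 64]))
# ['conv3x3 s=1: 256 -> 32', '1 x conv3x3 s=2: 256 -> 64']
print(transition_plan([32, 64], [32, 64, 128]))
# ['identity', 'identity', '1 x conv3x3 s=2: 64 -> 128']
```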

==make_fuse_layers==: fuses information across scales

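```python
# Method of the multi-branch module; relies on self.num_branches,
# self.num_inchannels and self.multi_scale_output.
def _make_fuse_layers(self):
    if self.num_branches == 1:
        return None

    num_branches = self.num_branches
    num_inchannels = self.num_inchannels
    fuse_layers = []
    # fuse_layers[i][j] transforms branch j before it is added to branch i
    for i in range(num_branches if self.multi_scale_output else 1):
        fuse_layer = []
        for j in range(num_branches):
            if j > i:
                # Lower resolution -> higher: 1x1 conv, BN, nearest upsampling
                fuse_layer.append(nn.Sequential(
                    nn.Conv2d(num_inchannels[j], num_inchannels[i], 1, 1, 0, bias=False),
                    nn.BatchNorm2d(num_inchannels[i]),
                    nn.Upsample(scale_factor=2 ** (j - i), mode='nearest')))
            elif j == i:
                fuse_layer.append(None)
            else:
                # Higher resolution -> lower: (i - j) stride-2 3x3 convolutions
                conv3x3s = []
                for k in range(i - j):
                    if k == i - j - 1:
                        # Last conv switches to the target channel count, no ReLU
                        num_outchannels_conv3x3 = num_inchannels[i]
                        conv3x3s.append(nn.Sequential(
                            nn.Conv2d(num_inchannels[j], num_outchannels_conv3x3,
                                      3, 2, 1, bias=False),
                            nn.BatchNorm2d(num_outchannels_conv3x3)))
                    else:
                        num_outchannels_conv3x3 = num_inchannels[j]
                        conv3x3s.append(nn.Sequential(
                            nn.Conv2d(num_inchannels[j], num_outchannels_conv3x3,
                                      3, 2, 1, bias=False),
                            nn.BatchNorm2d(num_outchannels_conv3x3),
                            nn.ReLU(True)))
                fuse_layer.append(nn.Sequential(*conv3x3s))
        fuse_layers.append(nn.ModuleList(fuse_layer))

    return nn.ModuleList(fuse_layers)
```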
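The `i`/`j` indexing in this method is easier to follow as a table. The sketch below reproduces only the branching conditions and labels each cell with the resampling applied to branch `j` before it is added into branch `i`. `fuse_plan` is a hypothetical helper that builds no layers.

```python
def fuse_plan(num_branches):
    """plan[i][j] names the op applied to branch j before it is added to branch i."""
    plan = []
    for i in range(num_branches):
        row = []
        for j in range(num_branches):
            if j > i:
                row.append(f"up x{2 ** (j - i)}")    # lower resolution source
            elif j == i:
                row.append("identity")
            else:
                row.append(f"down x{2 ** (i - j)}")  # higher resolution source
        plan.append(row)
    return plan


for row in fuse_plan(3):
    print(row)
# ['identity', 'up x2', 'up x4']
# ['down x2', 'identity', 'up x2']
# ['down x4', 'down x2', 'identity']
```

Each row corresponds to one output branch, which matches the statement in Step6 that every branch's output is fused from all branches.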